mercutio:

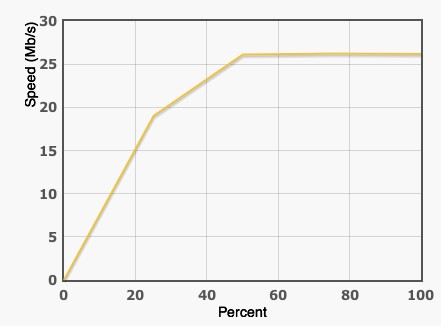

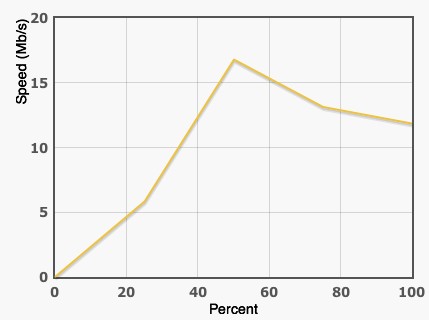

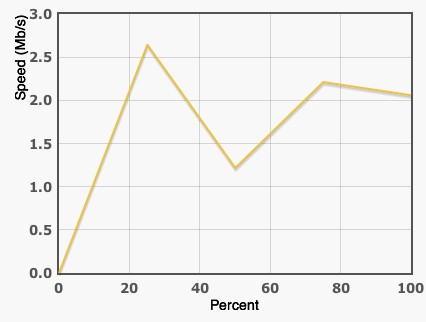

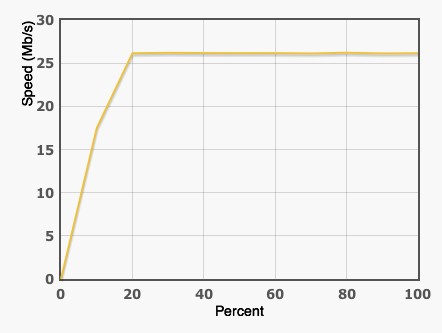

i dunno why everyone still seems to think it's delay that affects bandwidth on high latency connections. it's packet loss. if you have 0% packet loss you can get line rate to overseas destinations. if you have even minor packet loss it can severely degrade performance.

for the same level of packet loss, the lower the latency, the better tcp copes with it.
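to put rough numbers on that, here's a minimal sketch using the well-known Mathis et al. approximation for loss-limited tcp throughput, rate ≈ (MSS / RTT) × (C / sqrt(p)). this is a Reno-style model, so treat it as a ballpark only; newer congestion control such as cubic generally does better on long paths. the function name, the 1460 byte MSS and the example RTT and loss figures are my own assumptions for illustration, and this is not necessarily the formula the calculator linked below uses.

```python
import math


def mathis_throughput_bps(mss_bytes, rtt_s, loss_rate, c=math.sqrt(3 / 2)):
    """Rough upper bound on steady-state TCP throughput in bits/s.

    Uses the Mathis et al. approximation for Reno-style congestion
    control: rate ~= (MSS / RTT) * (C / sqrt(p)).
    All parameter names are illustrative, not from any library.
    """
    if loss_rate <= 0:
        # With zero loss the model gives no limit; in practice the link
        # speed or the window size becomes the bottleneck instead.
        return math.inf
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    mss = 1460  # assumed typical Ethernet MSS in bytes
    for rtt_ms in (20, 200):
        for loss in (0.0001, 0.01):
            bps = mathis_throughput_bps(mss, rtt_ms / 1000, loss)
            print(f"rtt={rtt_ms:>3} ms  loss={loss:.2%}  ~{bps / 1e6:.2f} Mbit/s")
```

under this model, at 0.01% loss a 200 ms path comes out at roughly 7 Mbit/s and a 20 ms path at roughly 70 Mbit/s: same loss, ten times the latency, a tenth of the throughput. with zero loss the model puts no cap on the rate at all, which is the point above.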

but with modern tcp improvements like cubic congestion control it's not hard to do 100 megabit+ to the other side of the world.

yes you are correct

Cool little calculator for those who haven't seen it.

http://wand.net.nz/~perry/max_download.php