My router restarted overnight and, to my surprise, the latency is now back to normal.

Any explanation from the team?

I've never been able to replicate the issue on my connections - can anyone else affected please see if they are now fixed?

Cheers - Neil G

Please note all comments are from my own brain and don't necessarily represent the position or opinions of my employer, previous employers, colleagues, friends or pets.

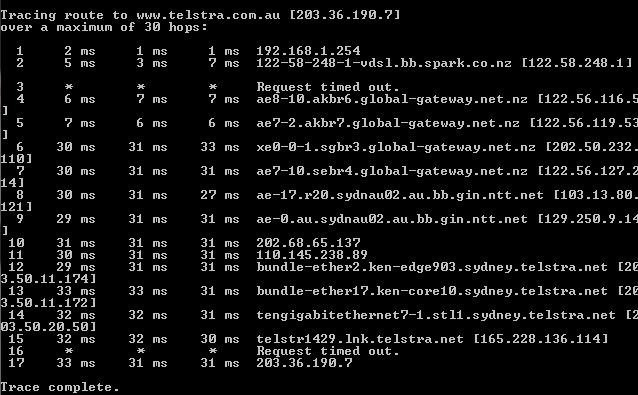

The Spark connection here is still affected.

```
Tracing route to www.telstra.com.au [203.36.148.7]
over a maximum of 30 hops:

1 1 ms 1 ms 1 ms home.gateway.pace.net [192.168.1.254]
2 1 ms 1 ms 1 ms home.gateway.pace.net [192.168.1.254]
3 18 ms 29 ms 30 ms 125-239-244-1-adsl.bb.spark.co.nz [125.239.244.1]
4 * * 31 ms mdr-ip24-int.msc.global-gateway.net.nz [122.56.116.6]
5 30 ms 30 ms 31 ms ae8-10.akbr6.global-gateway.net.nz [122.56.116.5]
6 31 ms 31 ms 31 ms ae2-6.tkbr12.global-gateway.net.nz [122.56.127.17]
7 53 ms 53 ms 54 ms xe0-0-4.sebr3.global-gateway.net.nz [122.56.127.90]
8 54 ms 54 ms 54 ms 122.56.119.86
9 53 ms 62 ms 54 ms ae-17.r20.sydnau02.au.bb.gin.ntt.net [103.13.80.121]
10 54 ms 53 ms 53 ms ae-0.au.sydnau02.au.bb.gin.ntt.net [129.250.9.14]
11 54 ms 54 ms 72 ms 202.68.65.137
12 173 ms 173 ms 173 ms Bundle-Ether50.chw-edge903.sydney.telstra.net [110.145.238.89]
13 177 ms 178 ms 177 ms bundle-ether2.ken-edge903.sydney.telstra.net [203.50.11.174]
14 175 ms 175 ms 175 ms bundle-ether17.ken-core10.sydney.telstra.net [203.50.11.172]
15 187 ms 187 ms 187 ms bundle-ether12.win-core10.melbourne.telstra.net [203.50.11.123]
16 184 ms 184 ms 184 ms tengigabitethernet7-1.win22.melbourne.telstra.net [203.50.80.162]
17 186 ms 186 ms 186 ms telstr745.lnk.telstra.net [139.130.39.114]
18 * * * Request timed out.
19 186 ms 186 ms 186 ms 203.36.148.7
```
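A quick way to read a trace like this is to find the hop where the round-trip time jumps and then stays high. Here is a minimal Python sketch. It assumes Windows `tracert`-style lines as pasted here: a hop number, up to three RTT columns, then the host. `hop_medians` and `biggest_jump` are hypothetical helper names, and each hop is summarised by the median of its probes.

```python
import re
from statistics import median

# Matches one RTT column in Windows tracert output, e.g. "173 ms" or "<1 ms".
# "*" (no reply) columns are simply not matched; "<1 ms" is counted as 1 ms.
RTT = re.compile(r"<?(\d+) ms")


def hop_medians(lines):
    """Return a list of (hop number, median RTT in ms) for hops that replied."""
    hops = []
    for line in lines:
        parts = line.split(maxsplit=1)
        if len(parts) < 2 or not parts[0].isdigit():
            continue  # skip headers, blank lines and anything that isn't a hop
        rtts = [int(m.group(1)) for m in RTT.finditer(parts[1])]
        if rtts:
            hops.append((int(parts[0]), median(rtts)))
    return hops


def biggest_jump(hops):
    """Return (hop before, hop after, increase in ms) for the largest RTT rise.

    Needs at least two responding hops.
    """
    pairs = zip(hops, hops[1:])
    (h1, r1), (h2, r2) = max(pairs, key=lambda p: p[1][1] - p[0][1])
    return h1, h2, r2 - r1


# Hops 10 to 13 of the Spark trace above.
spark_trace = """\
10 54 ms 53 ms 53 ms ae-0.au.sydnau02.au.bb.gin.ntt.net [129.250.9.14]
11 54 ms 54 ms 72 ms 202.68.65.137
12 173 ms 173 ms 173 ms Bundle-Ether50.chw-edge903.sydney.telstra.net [110.145.238.89]
13 177 ms 178 ms 177 ms bundle-ether2.ken-edge903.sydney.telstra.net [203.50.11.174]
"""
print(biggest_jump(hop_medians(spark_trace.splitlines())))  # (11, 12, 119)
```

A jump that persists on every later hop, like the roughly 119 ms added at Telstra's Sydney edge here, means the extra delay sits either on that link or on the return path from that hop onward. Traceroute alone can't tell which, so a trace run from the far end is useful.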

And on 2Degrees:

```
Tracing route to www.telstra.com.au [203.36.190.11]
over a maximum of 30 hops:

1 <1 ms <1 ms <1 ms 192.168.1.1
2 30 ms 30 ms 41 ms 69.7.69.111.static.snap.net.nz [111.69.7.69]
3 * * * Request timed out.
4 64 ms 64 ms 63 ms 4.56.69.111.static.snap.net.nz [111.69.56.4]
5 64 ms 64 ms 64 ms 5.56.69.111.static.snap.net.nz [111.69.56.5]
6 64 ms 64 ms 64 ms BE-108.cor01.syd11.nsw.VOCUS.net.au [114.31.192.84]
7 65 ms 64 ms 64 ms bundle-100.bdr01.syd11.nsw.vocus.net.au [114.31.192.81]
8 64 ms 64 ms 64 ms Bundle-Ether12.ken-edge903.sydney.telstra.net [203.27.185.57]
9 66 ms 67 ms 65 ms bundle-ether17.ken-core10.sydney.telstra.net [203.50.11.172]
10 64 ms 64 ms 65 ms tengigabitethernet7-1.stl1.sydney.telstra.net [203.50.20.50]
11 64 ms 65 ms 64 ms telstr1429.lnk.telstra.net [165.228.136.114]
12 * * * Request timed out.
13 65 ms 67 ms 66 ms 203.36.190.11
```

Yep, mine is still affected. As hio77 mentioned, the issue is NOT fixed; geektahiti's IP simply changed with the router reset, and the new one is in an unaffected range.
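Since whether a connection is affected seems to depend on which range the assigned IP falls in, the check can be scripted with Python's standard `ipaddress` module. This is a sketch only. No list of affected Spark ranges has been posted in this thread, so the prefixes below are hypothetical placeholders taken from the RFC 5737 documentation ranges.

```python
import ipaddress

# Hypothetical placeholder prefixes (RFC 5737 documentation ranges),
# NOT the real affected ranges, which have not been published here.
AFFECTED = [ipaddress.ip_network(p) for p in ("203.0.113.0/24", "198.51.100.0/24")]


def is_affected(ip: str) -> bool:
    """Return True if ip falls inside any of the listed prefixes."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in AFFECTED)


print(is_affected("203.0.113.45"))  # True
print(is_affected("192.0.2.10"))    # False
```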

Talkiet:

I've never been able to replicate the issue on my connections - can anyone else affected please see if they are now fixed?

Cheers - Neil G

Any updates you can give from Spark, or have you heard anything from your international team? I'm still experiencing this high latency.

Latency to Telstra seems normal after a few restarts. I guess I was given an IP in an unaffected range?

However, I'm still getting consistently high latency to Blizzard services, namely WoW, so it seems there's still a routing issue there?

jspk:

Latency to Telstra seems normal after a few restarts. I guess I was given an IP in an unaffected range?

However, I'm still getting consistently high latency to Blizzard services, namely WoW, so it seems there's still a routing issue there?

I think this is the first I'm hearing of it affecting a route other than Telstra.

I would need to check a trace from an affected IP. It's possible they use Telstra as their carrier and are therefore also affected.

#include <std_disclaimer>

Any comments made are personal opinion and do not reflect directly on the position my current or past employers may have.

Talkiet:

I've never been able to replicate the issue on my connections - can anyone else affected please see if they are now fixed?

Cheers - Neil G

Here's an easy way to test: https://www.telstra.net/cgi-bin/trace

If the geolocation data is correct, Telstra seems to be routing affected ranges via Hong Kong.

```
1 gigabitethernet3-3.exi1.melbourne.telstra.net (203.50.77.49) 0.359 ms 0.268 ms 0.240 ms
2 bundle-ether3-100.exi-core10.melbourne.telstra.net (203.50.80.1) 2.613 ms 1.416 ms 2.117 ms
3 bundle-ether12.chw-core10.sydney.telstra.net (203.50.11.124) 14.234 ms 14.285 ms 13.109 ms
4 bundle-ether1.oxf-gw11.sydney.telstra.net (203.50.6.93) 15.859 ms 14.534 ms 15.109 ms
5 bundle-ether1.sydo-core03.sydney.reach.com (203.50.13.98) 15.359 ms 14.535 ms 14.983 ms
6 i-0-1-0-15.sydo-core04.bi.telstraglobal.net (202.84.222.54) 15.733 ms
7 i-0-4-0-29.1wlt-core02.bx.telstraglobal.net (202.84.136.209) 157.576 ms 157.598 ms 155.872 ms
8 i-92.eqla01.bi.telstraglobal.net (202.84.253.82) 155.401 ms
9 unknown.telstraglobal.net (134.159.63.171) 155.329 ms 159.828 ms 237.356 ms
10 206.82.129.90 (206.82.129.90) 154.149 ms 153.953 ms 153.906 ms
11 ae0-10.lebr7.global-gateway.net.nz (202.50.232.41) 154.900 ms 154.702 ms 154.777 ms
12 xe8-0-5-0.tkbr12.global-gateway.net.nz (210.55.202.193) 156.526 ms 156.200 ms
13 ae6-10.akbr6.global-gateway.net.nz (122.56.127.18) 155.075 ms
14 ae10-10.tkbr12.global-gateway.net.nz (202.50.232.29) 159.322 ms
15 * ae6-10.akbr6.global-gateway.net.nz (122.56.127.18) 161.825 ms
```

hashbrown:

```
6 i-0-1-0-15.sydo-core04.bi.telstraglobal.net (202.84.222.54) 15.733 ms
7 i-0-4-0-29.1wlt-core02.bx.telstraglobal.net (202.84.136.209) 157.576 ms 157.598 ms 155.872 ms
8 i-92.eqla01.bi.telstraglobal.net (202.84.253.82) 155.401 ms
9 unknown.telstraglobal.net (134.159.63.171) 155.329 ms 159.828 ms 237.356 ms
```

I would suggest:

Not Hong Kong. One Wilshire is largely the default data centre in the US for Southern Cross (SC) traffic from NZ/AUS; I was there last week, in fact. The issue is that Telstra is preferring the route it learns at Equinix over the route Spark is likely advertising to them in Sydney. Of course, if they "peered" this would not happen, as peering sessions are generally preferred over transit sessions.

https://www.coresite.com/data-centers/locations/los-angeles/one-wilshire

https://www.equinix.com/locations/united-states-colocation/los-angeles-data-centers/
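The peering-versus-transit point comes down to BGP route preference. Here is a simplified Python sketch of it. It assumes the common (but not universal) policy of giving routes learned from peers a higher LOCAL_PREF than routes learned from transit. The preference values, neighbour labels and prefix are all hypothetical, and real BGP best-path selection has several more tie-breakers.

```python
from dataclasses import dataclass

# Assumed LOCAL_PREF policy: peer routes above transit routes. This is a common
# convention, not a rule; each network sets its own values.
LOCAL_PREF = {"peer": 200, "transit": 100}


@dataclass
class Route:
    prefix: str
    neighbour: str     # hypothetical label for where the route was learned
    session: str       # "peer" or "transit"
    as_path_len: int


def best_route(routes):
    """Simplified best-path choice: highest LOCAL_PREF, then shortest AS path.

    Real BGP continues with further tie-breakers (origin, MED, eBGP vs iBGP,
    IGP cost, ...), which are omitted here.
    """
    return max(routes, key=lambda r: (LOCAL_PREF[r.session], -r.as_path_len))


candidates = [
    Route("203.0.113.0/24", "transit-los-angeles", "transit", 2),
    Route("203.0.113.0/24", "peer-sydney", "peer", 3),
]
print(best_route(candidates).neighbour)  # peer-sydney
```

If both routes were learned over transit, LOCAL_PREF would tie and the decision would fall through to later tie-breakers such as AS-path length. In that case a route pointing across the Pacific can win even though a Sydney path exists.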

```
9 unknown.telstraglobal.net (134.159.63.171) 155.329 ms 159.828 ms 237.356 ms
```

There could potentially be at least three parties involved.

A month later, the issue is still not fixed and there are no updates from Spark.

jspk:

A month later, the issue is still not fixed and there are no updates from Spark.

Um, you're not still expecting this to get fixed at this point, are you? If it's been a month, I would suggest it's now in the too-hard bucket and the case has been quietly closed. After all, you can still reach the endpoint; it's just sub-optimal routing. If you want optimised routing, I would suggest picking a service provider with an open peering policy.

noroad:

jspk:

A month later, the issue is still not fixed and there are no updates from Spark.

Um, you're not still expecting this to get fixed at this point, are you? If it's been a month, I would suggest it's now in the too-hard bucket and the case has been quietly closed. After all, you can still reach the endpoint; it's just sub-optimal routing. If you want optimised routing, I would suggest picking a service provider with an open peering policy.

Surely this latency/sub-optimal routing is being noticed by others and is something they'd want to fix? It looks pretty bad when other ISPs aren't having this issue.

Do I just have to wait for this to be fixed overseas?

Has anyone who had this issue found a workaround (VPN etc.)?
