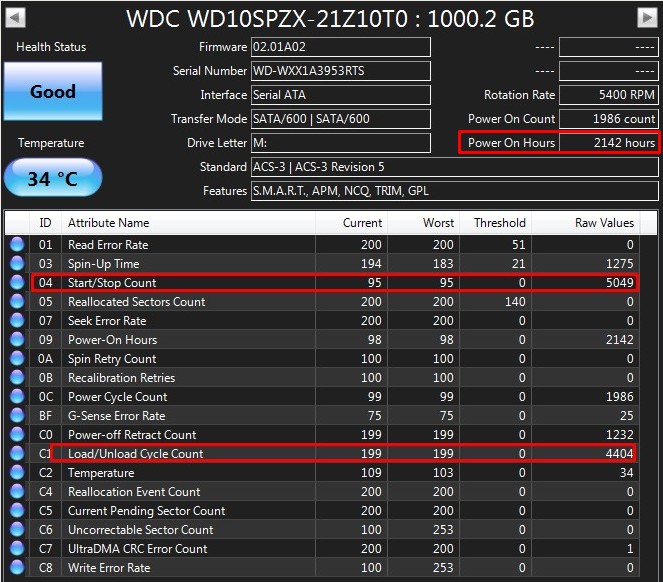

Figured this was worth posting - pulled from a (near) brand new Acer. I can see these drives will all die an early death.

Edit:

Stats from identical drive - what gives?


Power on count of 42 and start/stop count of 5831 don't make sense.

Maybe CrystalDiskInfo doesn't like the drive controller.

The main one to watch is the Reallocated Sector Count which is still zero.

That will bring down the health rating and show the drive is really on the way out.

paulgr:

Power on count of 42 and start/stop count of 5831 don't make sense.

Maybe CrystalDiskInfo doesn't like the drive controller.

The main one to watch is the Reallocated Sector Count which is still zero.

That will bring down the health rating and show the drive is really on the way out.

WD drives with High Load Cycle are notorious for dying early. The actuator wears out leading to mechanical failure.

paulgr:

Power on count of 42 and start/stop count of 5831 don't make sense.

Maybe CrystalDiskInfo doesn't like the drive controller.

The main one to watch is the Reallocated Sector Count which is still zero.

That will bring down the health rating and show the drive is really on the way out.

Toshiba MQ 2.5 series as well, from around 150,000 Load/Unload cycles they slowly grind to a halt.

Here's an average 3.5" drive for comparison:

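Looking at the 4 WD drives in my server:

- Red 3TB: 117 load cycles in 73,081 hours

- Red 4TB: 22,025 load cycles in 33,782 hours

- White label 12TB: 7,202 load cycles in 20,748 hours

- White label 14TB: 527 load cycles in 11,314 hours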

Not sure what to make of that really, except the drive in your first post is behaving very very strangely.

I don't think these numbers are especially reliable. The 3TB red has 224 power cycles. How would it only have 117 load cycles?

allio:

Looking at the 4 WD drives in my server:

- Red 3TB: 117 load cycles in 73,081 hours

- Red 4TB: 22,025 load cycles in 33,782 hours

- White label 12TB: 7,202 load cycles in 20,748 hours

- White label 14TB: 527 load cycles in 11,314 hours

Not sure what to make of that really, except the drive in your first post is behaving very very strangely.

I don't think these numbers are especially reliable. The 3TB red has 224 power cycles. How would it only have 117 load cycles?

Similar here: a 2TB WD Red with 85,252 hours and 1,087,287 load/unload cycles, still going fine. It has a start/stop count of 41,379.
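To put the server drives quoted in this thread on the same footing, the counts can be normalised to load/unload cycles per power-on hour. This is only a rough sketch: the numbers are copied from the posts above, the function name is made up for illustration, and it assumes the SMART raw values mean what they appear to mean, which several posters doubt.

```python
# (label, load/unload cycles, power-on hours) as quoted in this thread
DRIVES = [
    ("WD Red 3TB", 117, 73_081),
    ("WD Red 4TB", 22_025, 33_782),
    ("White label 12TB", 7_202, 20_748),
    ("White label 14TB", 527, 11_314),
    ("WD Red 2TB", 1_087_287, 85_252),
]


def cycles_per_hour(cycles, hours):
    """Average load/unload cycles per power-on hour."""
    if hours <= 0:
        raise ValueError("power-on hours must be positive")
    return cycles / hours


if __name__ == "__main__":
    for label, cycles, hours in DRIVES:
        rate = cycles_per_hour(cycles, hours)
        print(f"{label:<18} {rate:8.3f} cycles/hour")
```

On these figures the server drives range from well under one cycle per hour to roughly 13 per hour for the 2TB Red.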

openmedia:

Not that unusual for laptop class drives.

It is for a 42hr old drive. It's insanely high.

The SMART Load Cycle/Start Stop (same thing) attribute is down to 95 after just 2 days.

I'll look up the serial number, will report back....

I think you are reading too much into the results, which are known not to be accurate.

Indeed. I've had drives that reported healthy SMART results run into problems reading/writing sectors when tested with utilities that read/write every sector on the HDD (and they still reported a healthy SMART result!).

Unless the drive is definitely performing poorly or making any kind of trouble, I'd just take a note of the SMART results as a curiosity and keep an eye on it.

If you're running Linux there are some useful utilities you can use. Some can do non-destructive read tests. However, I found the best tests are destructive read/write tests, as they check that every write is read back exactly the same. Bad drives seem to fail these sorts of tests fairly quickly.
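The write-then-read-back idea can be sketched in a few lines. This is an illustration only, not a replacement for a proper disk-testing utility: it works on a scratch file rather than a raw device, the pattern bytes and function name are assumptions chosen for the example, and because reads will usually be served from the OS page cache it does not prove anything about the platters themselves.

```python
import os
import tempfile

# Alternating-bit and all-ones/all-zeros patterns (an assumed choice for this sketch)
PATTERNS = (0xAA, 0x55, 0xFF, 0x00)


def write_verify(path, size_bytes, block_size=1 << 20, patterns=PATTERNS):
    """Fill the file with each pattern, read it back, and return a list of
    (pattern, offset) pairs for every block that did not match."""
    mismatches = []
    for pattern in patterns:
        block = bytes([pattern]) * block_size

        # Write pass: this overwrites whatever was in the file (destructive).
        with open(path, "wb") as f:
            remaining = size_bytes
            while remaining > 0:
                n = min(block_size, remaining)
                f.write(block[:n])
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to the device

        # Verify pass: every block must come back exactly as written.
        with open(path, "rb") as f:
            offset = 0
            while offset < size_bytes:
                n = min(block_size, size_bytes - offset)
                if f.read(n) != block[:n]:
                    mismatches.append((pattern, offset))
                offset += n
    return mismatches


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as scratch_dir:
        scratch = os.path.join(scratch_dir, "scratch.bin")
        bad = write_verify(scratch, 8 * 1024 * 1024)
        print("mismatched blocks:", bad)
```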

SMART is good only as one of many tools in your toolbox. And regardless you should always be backing up!

That sounds like its idle timeout is set too low. This was a problem back when the first WD Green drives were released (2009?), and I can remember having to run a little 3rd party utility called wdidle3 to increase the timeout. It would be worth trying to see if it can still set the idle timer of a modern WD drive, or if there is another way to do that now.

fe31nz:

That sounds like its idle timeout is set too low. This was a problem back when the first WD Green drives were released (2009?), and I can remember having to run a little 3rd party utility called wdidle3 to increase the timeout. It would be worth trying to see if it can still set the idle timer of a modern WD drive, or if there is another way to do that now.

Hi, thanks. I'm familiar with wdidle3 and the Greens.

The drive in question averages 142 cycles per hour. That extrapolates to 284,000 at 2,000hrs and 1,420,000 at 10,000hrs.....
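The extrapolation is plain linear scaling, sketched here on the assumption that the drive keeps parking its heads at a constant 142 cycles per power-on hour. The function names are made up for illustration, and the 150,000-cycle figure is the rough Toshiba MQ trouble point mentioned earlier in the thread, not a rating for this drive.

```python
def projected_cycles(rate_per_hour, hours):
    """Load/unload cycles expected after `hours`, assuming a constant rate."""
    return round(rate_per_hour * hours)


def hours_to_reach(target_cycles, rate_per_hour):
    """Power-on hours needed to reach `target_cycles` at a constant rate."""
    if rate_per_hour <= 0:
        raise ValueError("rate must be positive")
    return target_cycles / rate_per_hour


if __name__ == "__main__":
    RATE = 142  # cycles per power-on hour, as reported for the drive in question

    for hours in (2_000, 10_000):
        print(f"{hours:>6,} h -> {projected_cycles(RATE, hours):>9,} cycles")

    # 150,000 is the anecdotal trouble point quoted for another drive family
    print(f"150,000 cycles after about {hours_to_reach(150_000, RATE):,.0f} h")
```

At that rate, 150,000 cycles would arrive after roughly 1,056 power-on hours.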

I have a stack of maybe 30 problem drives on a shelf, many with high Load/Unload counts - but nowhere near this high (relative to power on time).

The SMART report in the second screenshot I posted is an identical drive, same firmware, but with radically different numbers - what I'd consider to be within normal range.

Why such a big difference between the two drives, I have no idea. So far it seems to be an anomaly.

Jase2985:

I think you are reading too much into the results, which are known not to be accurate.

this
